Black bears crypto game



The performance of the model is compared to the Buy and Hold strategy.


The model aims to help traders earn greater profits than using traditional strategies.

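The Buy and Hold comparison mentioned above can be illustrated with a minimal sketch. The function names, the price list, and the 0/1 position encoding are assumptions made for illustration; the source does not say how the model's returns were actually computed, and fees and slippage are ignored here.

```python
def buy_and_hold_return(prices):
    """Fractional return from buying at the first price and selling at the last."""
    if len(prices) < 2:
        raise ValueError("need at least two prices")
    return prices[-1] / prices[0] - 1.0


def strategy_return(prices, positions):
    """Compound return of a strategy over the same price series.

    positions[t] is 1 if the strategy holds the asset from step t to t+1
    and 0 if it stays in cash (hypothetical encoding, not from the source).
    """
    if len(positions) != len(prices) - 1:
        raise ValueError("need exactly one position per price step")
    equity = 1.0
    for t, pos in enumerate(positions):
        step_return = prices[t + 1] / prices[t] - 1.0
        equity *= 1.0 + pos * step_return
    return equity - 1.0


if __name__ == "__main__":
    prices = [100.0, 110.0, 99.0, 120.0]   # made-up prices for illustration
    positions = [1, 0, 1]                  # in the market, out, back in
    print("buy and hold:", buy_and_hold_return(prices))
    print("strategy:    ", strategy_return(prices, positions))
```

A strategy that is always in the market (all positions equal to 1) reproduces the Buy and Hold return, which makes that baseline a natural reference point.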


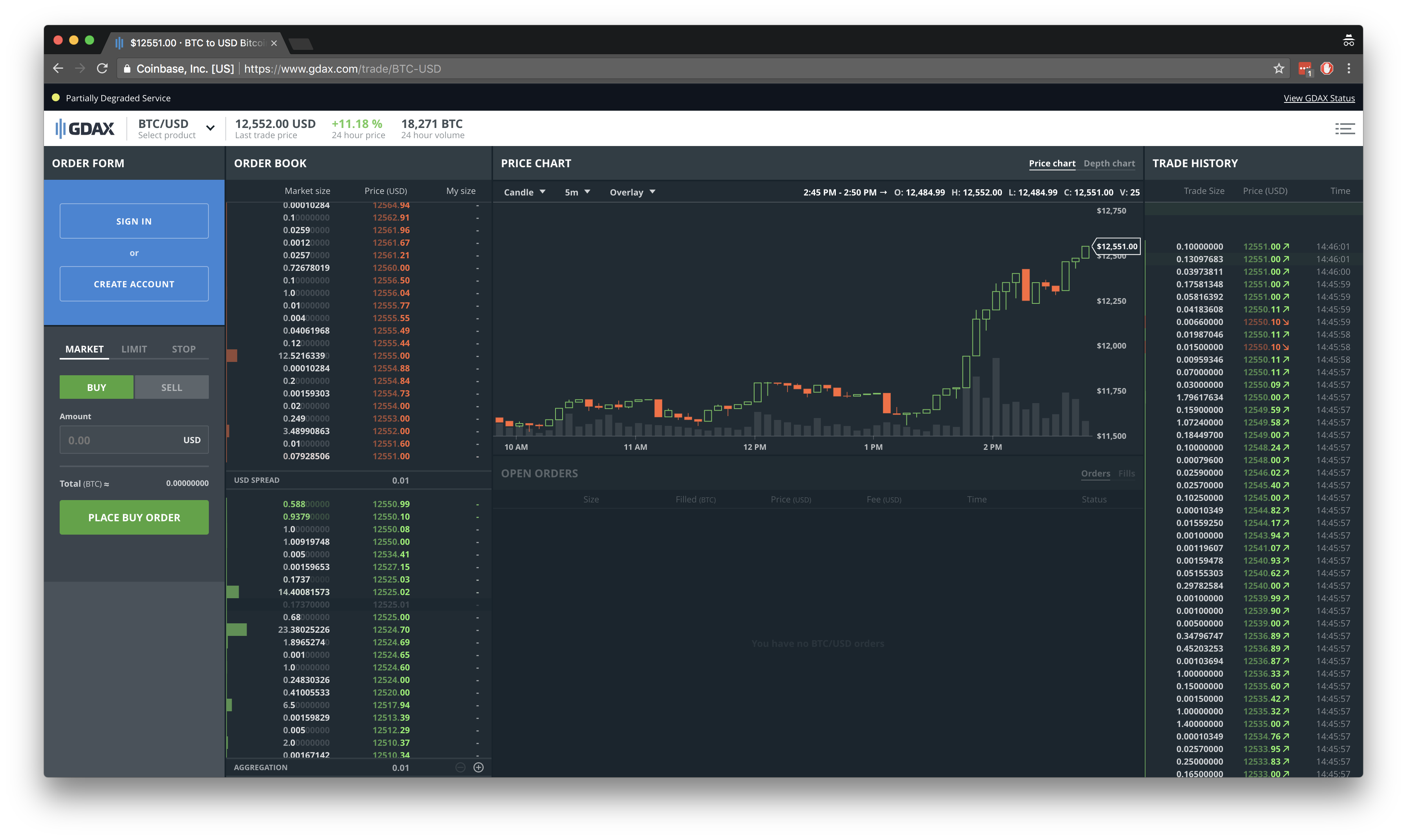

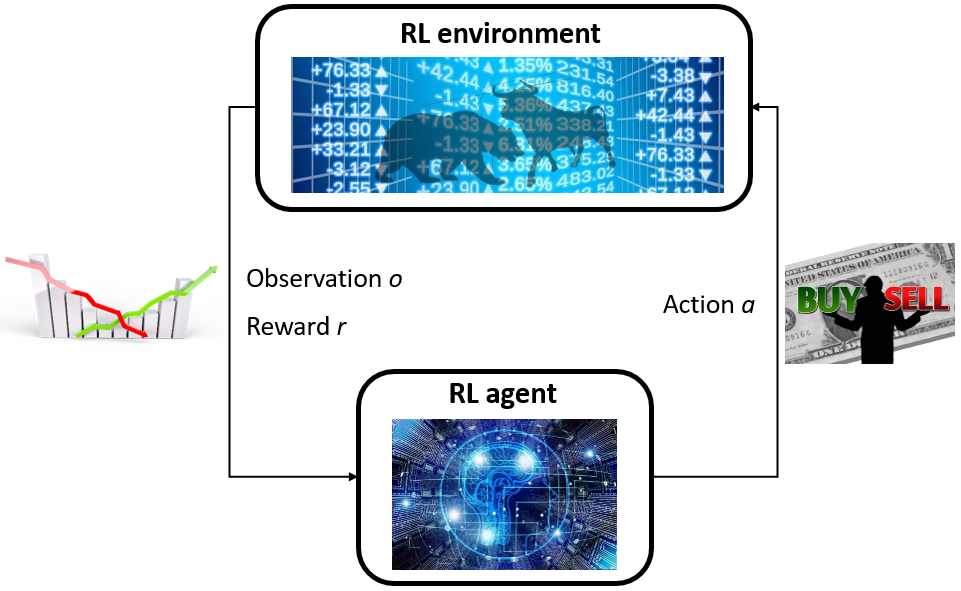

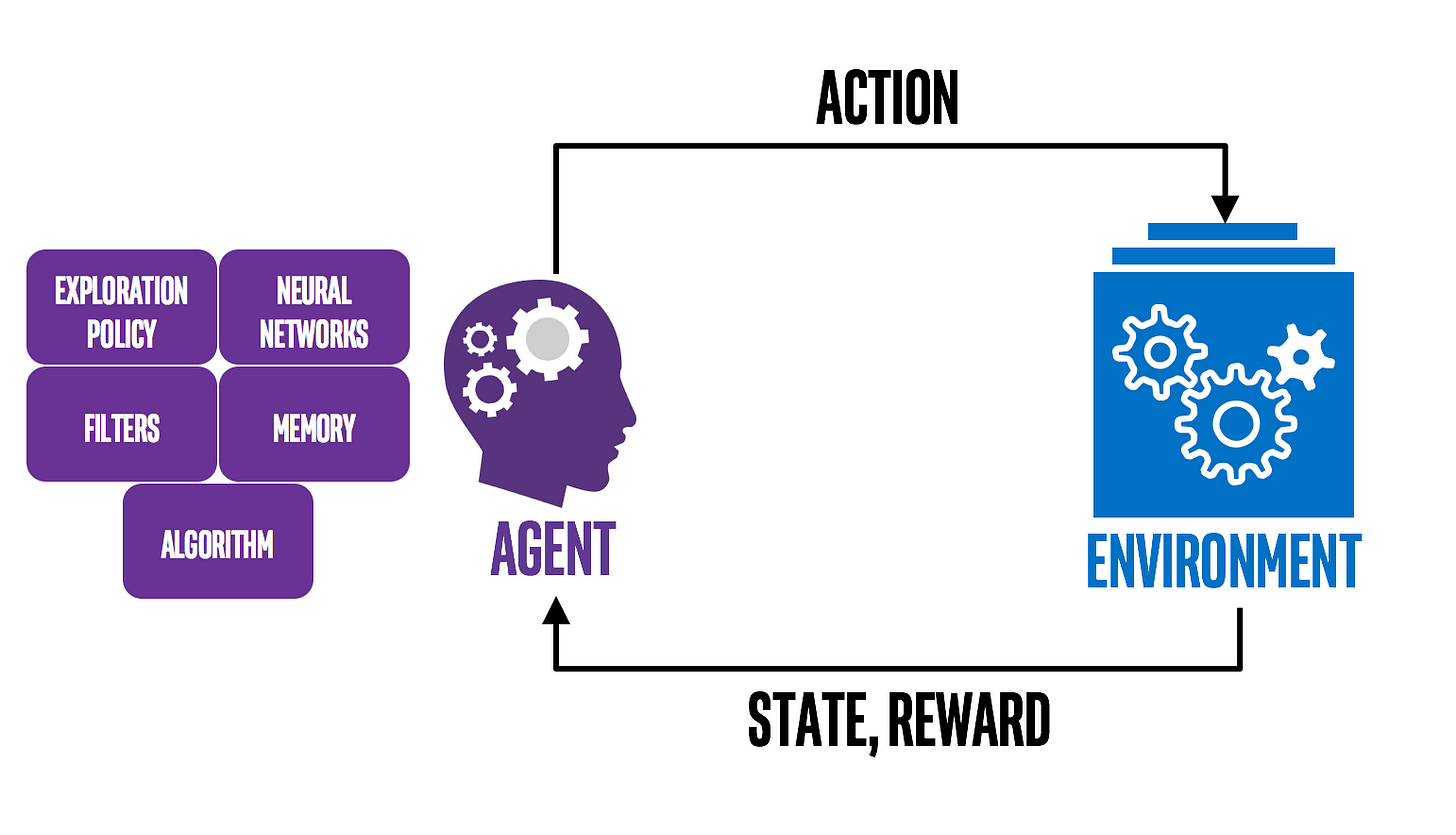

In this work, Deep Reinforcement Learning is applied to trade bitcoin. More precisely, Double and Dueling Double Deep Q-learning Networks are compared over a …

This implementation uses Stable Baselines and OpenAI Gym with three reinforcement learning methods: A2C, ACER, and PPO. The result is that A2C is the best method for …
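As a rough illustration of what distinguishes a Double Deep Q-learning Network from plain Q-learning, the sketch below computes the Double DQN bootstrap target for a single transition: the online network chooses the next action and the target network evaluates it. The function name, the three-action layout (hold, buy, sell), and the numeric values are assumptions for illustration only and are not taken from the work described above.

```python
def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double DQN target for one transition.

    q_online_next: Q-values of the next state from the online network.
    q_target_next: Q-values of the next state from the target network.
    Both are plain lists indexed by action (hypothetical layout).
    """
    if done:
        # No bootstrapping from a terminal state.
        return reward
    # Action selection uses the online network...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ...while action evaluation uses the target network.
    return reward + gamma * q_target_next[best_action]


if __name__ == "__main__":
    # Assumed action order: 0 = hold, 1 = buy, 2 = sell.
    q_online_next = [0.2, 0.9, 0.1]
    q_target_next = [0.5, 0.3, 0.8]
    print(double_dqn_target(1.0, False, q_online_next, q_target_next, gamma=0.5))
```

Separating selection from evaluation is what reduces the overestimation that arises when a single network both picks and scores the maximizing action.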